What’re you lookin’ at, ChatGPT?

Answer: We don’t really know. But we have a pretty good idea…

The Concept of "Attention" in Deep Learning

Deep learning, in all its glory and jargon, is a bit like a precocious toddler—eager to learn and incredibly sharp, but still occasionally drooling all over a Backyardigans toy. The concept of "attention" in language models isn’t that different from a cavorting kiddo picking out the most exciting toy from a pile; the coolest, the hippest, the…It’s Uniqua. It’s always gonna be Uniqua. (It’s true, I’m a Gen-X dad.)

But why, oh why, would a machine need to pay "attention"? Well, for the same reasons we do: to filter out the mundane, focus on the crucial stuff, and not get overwhelmed.

So, how does attention make ChatGPT perform so well when it comes to sequence-to-sequence tasks, like translating English to Klingon, or helping you cheat on (ahem, write) your college thesis?

ChatGPT is Born

We live in the age of BIG data. I mean, huge... Titanic... Galactic! (Okay, maybe not galactic.) And these poor bots, no matter how good they are at crunching numbers, can't possibly process every byte and bit in one go. That's where transformers and their attention mechanisms come in.

Transformers (in deep learning, not on Cybertron) are the type of neural network that makes up ChatGPT’s brain. They let it process text efficiently by looking at a whole passage at once and working out how its words relate, instead of trudging through it strictly one word at a time. They're unexpectedly awesome for handling diverse tasks, and they certainly excel in their ability to process vast amounts of written language.

Pre-Training and the Role of Attention

Ever wondered what ChatGPT’s baby phase looked like? Or its "terrible twos"? That age range encompasses its pre-training phase. And guess what the favorite toy in its playpen was? You nailed it! Uniqua! No, just kidding. But if you guessed that, you just exhibited a keen sense of attention. Callbacks are great!

Anyway, moving on to this digital baby…

The Process of Pre-Training ChatGPT

ChatGPT’s brain, at this phase of its development, isn’t too different from a real baby’s: picking up random words from overheard conversations, learning the rhythm of speech, even if the meaning's still a tad fuzzy and they can’t even talk yet.

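Ever play peek-a-boo with your young kids or cousins? The training process for ChatGPT’s brain has its own version of peek-a-boo. You may have heard of "masked language modeling," where random words in a sentence are hidden and the model has to guess them; that’s how models like BERT learn. GPT-style models like ChatGPT play a stricter version called causal (or next-word) language modeling: the next word is always the one that’s hidden (peekaboo!), and our little tyke has to guess it from everything that came before. And yes, as with toddlers, sometimes the guesses are hilariously off-mark. But every time it plays, it gets a tad better.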
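Here’s a minimal Python sketch contrasting the two peek-a-boo games: masked language modeling (hide a word anywhere and show both sides, as BERT-style models do) and the next-word version that GPT-style models like ChatGPT use. It assumes a sentence is split on spaces (real models split text into tokens, not words), and the function names are hypothetical. Real BERT-style training hides about 15% of tokens at random rather than one word at a time, and GPT-style training checks every position at once instead of looping.

```python
def next_word_games(sentence):
    """Causal LM (GPT-style): hide the next word, show only what came before."""
    words = sentence.split()  # toy simplification: real models use tokens
    return [(words[:i], words[i]) for i in range(1, len(words))]


def masked_word_games(sentence, mask="[MASK]"):
    """Masked LM (BERT-style): hide one word, show the words on BOTH sides."""
    words = sentence.split()
    games = []
    for i, hidden in enumerate(words):
        shown = words[:i] + [mask] + words[i + 1:]
        games.append((shown, hidden))
    return games


for context, answer in next_word_games("the cat sat on the mat"):
    print(" ".join(context), "->", answer)
```

Notice that the next-word game never lets the model peek at anything to the right of the hidden word, while the masked game shows both sides.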

"But where does attention come in? Aren’t you just rehashing your last post?" I hear you cry. Bear with me: this part is more of an expansion of that last post. Anyway, attention is the discerning eye that helps ChatGPT decide which words in these vast datasets are worth... well, attending to. During pre-training, toddling little ChatGPT was set loose in a candy store. Not every sweet was gonna make it into its shopping cart (or mouth): only the shiniest, most sugary ones.

How Attention Learns from Data

In ChatGPT’s brain, attention is directed by things called self-attention and multi-head attention.

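Self-attention allows ChatGPT to weigh how much each word in a sentence matters to every other word in that sentence. In “the cat sat down because it was tired,” for instance, figuring out what “it” means calls for paying close attention to “cat.” Think of it as emphasizing specific words when retelling a story.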
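Under the hood, this weighing is a short piece of arithmetic called scaled dot-product attention. Here’s a minimal pure-Python sketch, assuming made-up two-number “embeddings” for each word and skipping the learned query/key/value matrices a real transformer applies first; it illustrates the technique and is not ChatGPT’s actual code.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    biggest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def self_attention(vectors):
    """Scaled dot-product self-attention over a list of word vectors.

    Simplification: the vectors are used directly as queries, keys and
    values; a real transformer first multiplies them by learned matrices.
    """
    d = len(vectors[0])
    outputs, weight_rows = [], []
    for query in vectors:
        # How relevant is every word (key) to this word (query)?
        scores = [dot(query, key) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        # Blend all the words' values according to those weights.
        blended = [sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)]
        outputs.append(blended)
        weight_rows.append(weights)
    return outputs, weight_rows


# Hypothetical 2-D "embeddings" for three words.
words = ["cat", "sat", "mat"]
vectors = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
_, weights = self_attention(vectors)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each row of weights sums to 1: it’s how one word divides its attention among all the words in the sentence, itself included. Here “cat” gives more weight to “mat” than to “sat” only because their made-up vectors point in similar directions.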

Multi-head attention lets ChatGPT juggle multiple lines of thinking at once. Each “head” runs its own attention calculation in parallel, so different heads can evaluate different aspects of the same word or phrase. Kinda like how we watch a movie: paying attention to the plot, the music, and the actors' expressions simultaneously.
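A rough sketch of the multi-head idea in Python, assuming each head simply gets its own slice of every word’s vector. Real models instead give each head its own learned projection matrices and mix the heads’ results with one more learned matrix; both are omitted here, and the function names are hypothetical.

```python
import math


def softmax(scores):
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(vectors):
    """Single-head scaled dot-product self-attention (Q = K = V = vectors)."""
    d = len(vectors[0])
    out = []
    for q in vectors:
        weights = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in vectors])
        out.append([sum(w * v[j] for w, v in zip(weights, vectors)) for j in range(d)])
    return out


def multi_head_attention(vectors, num_heads):
    """Split each vector into num_heads slices, attend within each slice, re-join.

    Simplification: plain slicing stands in for per-head learned projections.
    """
    d = len(vectors[0])
    assert d % num_heads == 0, "vector size must divide evenly among heads"
    size = d // num_heads
    head_outputs = []
    for h in range(num_heads):
        # Each head only "sees" its own slice, i.e. its own aspect of each word.
        head_slice = [v[h * size:(h + 1) * size] for v in vectors]
        head_outputs.append(attend(head_slice))
    # Concatenate the heads' results back into full-size vectors.
    return [sum((head_outputs[h][i] for h in range(num_heads)), []) for i in range(len(vectors))]


# Hypothetical 4-number vectors for three words, split across 2 heads.
vectors = [[1.0, 0.0, 0.0, 1.0], [0.0, 1.0, 1.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
mixed = multi_head_attention(vectors, num_heads=2)
print([[round(x, 2) for x in v] for v in mixed])
```

With num_heads=1 this collapses back to ordinary single-head attention; with more heads, each slice can end up emphasizing different words.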

This pre-training process is like an AI workout; it refines ChatGPT’s attention "muscles," helping it pick up patterns in the data. Kinda like recognizing the melody in a song after hearing it a few times. Or how your cat comes running when it hears the can opener.

Emergent Behaviors During Pre-Training

Now, here’s where things start to get interesting. As ChatGPT learns and grows, unexpected quirks pop up. These emergent behaviors can be delightful insights or baffling oddities. For instance, from the vast data it trained on, ChatGPT has managed to identify certain patterns, like those between numbers and months. Other times, though, it might fixate on something offbeat. (No, ChatGPT, "banana taco" isn’t a thing!)

emergent behavior: a complex pattern or phenomenon that arises from the interactions of simpler elements, and which cannot be predicted solely from the properties of the individual elements

Studies on attention in pre-trained models like ChatGPT’s brain have uncovered lots of these genius moments (as well as plenty of boneheaded mistakes). They’re a riveting read for anyone into machine "psychology." It’s worth asking ChatGPT: “Briefly describe various emergent behaviors that have been found when studying transformer models.”

Can we say it’s like peering into the diary of a teenager, full of profound revelations one day and absolute nonsense the next? Yes. Yes, we can. But, you know, leave your kids’ diaries alone.

Fast Forward: ChatGPT’s all grown up

Spoiler alert: ChatGPT’s brain survived the intense pre-training process, got some more training after that—something called “reinforcement learning from human feedback” or RLHF…a subject for a later post—and now it’s out in the world, ready to show off what it can do.

ChatGPT decides to throw a party. Not just any party; it's the AI party of the decade. While some guests are just mingling around aimlessly, ChatGPT is quietly flexing its master host skills, intelligently tuning into multiple conversations, processing inputs, and responding with flair. But how does ChatGPT keep track of these myriad interactions, and how does its attention mechanism play into all this?

ChatGPT vs. Humans

Have you ever walked into a party and tried to pick up on interesting conversations? Imagine ChatGPT doing a digital version of this (super simplified, of course). Whenever you type a prompt—let's say, "Tell me about the history of pizza"—ChatGPT’s attention mechanism kicks into high gear.

ChatGPT’s transformer-based brain begins to "attend to" (focus on) the most important words or phrases in your prompt. This "attending to" can be categorized into several emergent attention types.

Sidebar: Why call them “emergent”? Because ChatGPT’s brain was never explicitly told how it’s supposed to pay attention to the way words relate to each other. It figured it out all on its own. For example, after seeing many sentences with pronouns, it tries to work out which things those pronouns refer to. This challenge, known as anaphora resolution, isn't always perfectly addressed, but it's one of the many things ChatGPT works on during its training.

What I’m getting at is this: all these examples aren’t specifically defined by the pre-training process; they are emergent behaviors that resulted from all the complex math happening under the hood.

Think of starlings: their brains are wired with a few simple rules:

Stay close to your neighbors, but don’t crowd them

If you can, go wherever they’re going

Avoid predators and obstacles

When a bunch of starlings get together, those three simple rules end up interacting in ways that were never included in their brain’s wiring. No individual starling is wired to produce the flock’s swirling shape (a “murmuration”). The murmuration is a more complex behavior than the sum of individual birds following these three rules.
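Those starling rules are close to Craig Reynolds’ classic “boids” simulation (1987), which is a handy way to poke at emergence yourself. Below is a minimal Python sketch of the first two rules (predator and obstacle avoidance are left out), assuming made-up strength constants; the function name and parameters are hypothetical. No line of code mentions a “flock,” yet the birds’ speeds converge.

```python
import math


def flock_step(boids, sight=50.0, cohesion=0.001, alignment=0.1,
               separation=0.05, personal_space=1.0):
    """Advance a toy flock one tick. Each boid is (x, y, vx, vy).

    Each bird reacts only to the neighbors it can see:
      1. stay close (cohesion) but don't crowd (separation)
      2. go wherever the neighbors are going (alignment)
    Rule 3 (avoid predators and obstacles) is omitted to keep this short.
    """
    new_boids = []
    for i, (x, y, vx, vy) in enumerate(boids):
        neighbors = [b for j, b in enumerate(boids)
                     if j != i and math.hypot(b[0] - x, b[1] - y) <= sight]
        dvx = dvy = 0.0
        if neighbors:
            n = len(neighbors)
            cx = sum(b[0] for b in neighbors) / n
            cy = sum(b[1] for b in neighbors) / n
            avx = sum(b[2] for b in neighbors) / n
            avy = sum(b[3] for b in neighbors) / n
            # Rule 1a: drift toward the neighbors' center.
            dvx += cohesion * (cx - x)
            dvy += cohesion * (cy - y)
            # Rule 1b: back away from anyone inside personal space.
            for ox, oy, _, _ in neighbors:
                if math.hypot(ox - x, oy - y) < personal_space:
                    dvx -= separation * (ox - x)
                    dvy -= separation * (oy - y)
            # Rule 2: nudge toward the neighbors' average velocity.
            dvx += alignment * (avx - vx)
            dvy += alignment * (avy - vy)
        vx, vy = vx + dvx, vy + dvy
        new_boids.append((x + vx, y + vy, vx, vy))
    return new_boids


# Two birds start at different speeds and end up nearly matching.
flock = [(0.0, 0.0, 0.0, 0.0), (10.0, 0.0, 2.0, 0.0)]
for _ in range(100):
    flock = flock_step(flock)
print([round(b[2], 3) for b in flock])
```

With only two birds you just see them settle into matching speeds; the flock-like patterns boids are known for tend to show up when you run dozens of them with a small sight radius and plot their positions.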

That’s an emergent behavior: a complex pattern or phenomenon that arises from the interactions of simpler elements, and which cannot be predicted solely from the properties of the individual elements.

In the case of ChatGPT, these emergent behaviors are the result of intricate interactions within its massive brain and galactic (okay, titanic) amounts of training data, rather than a set of simple predefined rules.

Let me state that once more: the attention categories below aren’t specifically defined anywhere inside ChatGPT; they’re best guesses at the types of “attention” that ChatGPT incorporates. Not just my guesses, either: lots of folks have written papers about this, and more are being written every day.

Let’s go back to the party, and see how ChatGPT’s attention mechanisms make it such an amazing host:

Syntactic Attention: This is the grammar police of the party. When ChatGPT focuses on the structure of sentences, it's flexing this type of attention.

Semantic Attention: Deep thoughts, anyone? Here, ChatGPT homes in on the meaning behind words, like the philosophical guests pondering life's mysteries.

Temporal Attention: Like reminiscing about the good old days or anticipating the future. ChatGPT uses this when sequencing matters.

Hierarchical Attention: Recognizing that some topics are broader than others, just like how some party discussions range from global issues to that guy on TikTok who made friends with a seagull.

Local Attention: The counterpart to global attention, where ChatGPT is the gossip, focusing on minute details of a story.

Global Attention: Here, the AI pays attention to the entire input, like the social butterfly who has a sense of the entire room's vibe.

Inter-sentence Attention: When two sentences relate, and ChatGPT plays matchmaker, making sure they gel well.

Self-referential Attention: ChatGPT reflecting on its own previous responses, akin to the occasional self-absorbed guest (yes, we get it, you don’t have a TV in your house).

Cross-modal Attention: When ChatGPT tries to correlate text with other forms of data—it's like bringing together fans of jazz and rock and watching the magic unfold.

Adaptive Attention: Tailoring responses based on the prompt's nature; because let's face it, not all party conversations are created equal.
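How might someone tell a “local” attention head from a “global” one? One simple diagnostic, sketched below in Python under the assumption that you already have a head’s attention-weight matrix, is the average distance between each token and the tokens it attends to. The weight patterns are made up for illustration, not taken from ChatGPT, whose internals aren’t public, and the function name is hypothetical.

```python
def mean_attention_distance(weights):
    """Average distance (in positions) between each token and what it attends to.

    weights[i][j] is how much token i attends to token j; each row sums to 1.
    Small values suggest a "local" head, large values a "global" one.
    """
    total = 0.0
    for i, row in enumerate(weights):
        total += sum(w * abs(i - j) for j, w in enumerate(row))
    return total / len(weights)


# Hypothetical weight patterns for a 4-token input.
previous_token_head = [  # each token looks at the token right before it
    [1.0, 0.0, 0.0, 0.0],
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
]
uniform_head = [[0.25] * 4 for _ in range(4)]  # every token looks everywhere equally

print(mean_attention_distance(previous_token_head))  # 0.75
print(mean_attention_distance(uniform_head))         # 1.25
```

Real analyses average this over many inputs, and the score only hints at a head’s “personality”; it doesn’t prove one.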

You’re thinking “big deal, I can do those things.” Sure, Jan. Can you do them all at once? Because ChatGPT doesn't just join one conversation. It engages all of them at the same time! It's not just listening; it's multi-tasking, interpreting, and engaging on multiple fronts.

Sidebar: Let’s get slightly nerdy…

Yes, metaphors are great, but I’ve been eliding a lot of complex math that is going on behind the scenes.

During pre-training, ChatGPT played peek-a-boo with sentences (remember that game from earlier?): words were hidden and it had to guess them. The closer its guess, the more confident it became in the way it was paying attention to the other words to make that guess. The further off it was, the more it adjusted how it focused its attention, thinking about which words might be more important next time.
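How close a guess was gets measured with a score called cross-entropy loss, and the adjusting is done by gradient descent. Here’s a deliberately tiny Python sketch, assuming the model’s guess is just three made-up scores that we nudge directly; in a real model the same kind of gradient flows back through billions of parameters, including the ones that control attention. Function names are hypothetical.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    biggest = max(logits.values())
    exps = {w: math.exp(s - biggest) for w, s in logits.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def loss(logits, hidden_word):
    """Cross-entropy: small when the model bet heavily on the right word."""
    return -math.log(softmax(logits)[hidden_word])


def learn_from_guess(logits, hidden_word, learning_rate=0.5):
    """One gradient-descent step on the scores themselves.

    For softmax + cross-entropy, the gradient for each word's score is
    (predicted probability) minus (1 if it's the hidden word else 0).
    """
    probs = softmax(logits)
    return {w: s - learning_rate * (probs[w] - (1.0 if w == hidden_word else 0.0))
            for w, s in logits.items()}


# "The cat sat on the ___": hypothetical scores before learning.
scores = {"mat": 0.5, "moon": 1.0, "banana": 0.2}
print(round(loss(scores, "mat"), 3))
updated = learn_from_guess(scores, "mat")
print(round(loss(updated, "mat"), 3))  # smaller: it now bets more on "mat"
```

A wrong guess (low probability on the hidden word) produces a big gradient and a big adjustment; a confident right guess barely changes anything.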

After pre-training was over, ChatGPT didn't just have a fixed weight for each word. Instead, for every new sentence, it dynamically recalculates how much attention each word should get, drawing from patterns it learned in the past. It earned a lot of “confidence” in its past guesses, after all! The attention it gives is influenced by the patterns and relationships it saw during its peek-a-boo training.
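Here’s a small Python sketch of that idea, assuming two tiny hypothetical “learned” matrices: the matrices stay frozen, yet the attention weights come out different for each new input, because they’re computed from the words themselves. The numbers and names are illustrative only.

```python
import math


def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def softmax(scores):
    biggest = max(scores)
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# "Learned" parameters: frozen after training (hypothetical numbers).
W_QUERY = [[1.0, 0.5], [0.0, 1.0]]
W_KEY = [[1.0, 0.0], [0.5, 1.0]]


def attention_weights(embeddings):
    """Same frozen matrices every time; the weights depend on the input."""
    queries = [matvec(W_QUERY, e) for e in embeddings]
    keys = [matvec(W_KEY, e) for e in embeddings]
    scale = math.sqrt(len(keys[0]))
    return [softmax([sum(a * b for a, b in zip(q, k)) / scale for k in keys])
            for q in queries]


# Hypothetical embeddings for two different three-word sentences.
sentence_a = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
sentence_b = [[0.0, 1.0], [1.0, 0.0], [0.2, 0.1]]
print([round(w, 2) for w in attention_weights(sentence_a)[0]])
print([round(w, 2) for w in attention_weights(sentence_b)[0]])
```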

What makes ChatGPT so useful is how its attention shapes the completion it writes. At this point of the party, ChatGPT steps into the kitchen. It attends to all those conversations at once, remembering anecdotes and stories, and recalling guests’ preferences for food. All that data helps it decide the ingredients for each dish, its attention mechanism influencing the presentation, cuisine type, and taste of the dish.

From the moment ChatGPT reads your prompt until it’s done generating its completion, it’s using that emergent attention mechanism.

Wait…Until It’s Done Generating Its Completion?

Yeah. It’s pretty mind-blowing.

Back at the party, as ChatGPT’s meal prep progresses, it will (naturally) start adding ingredients to a dish. As soon as those new ingredients are added, ChatGPT's attention evolves. Sure, it had an idea what it was going to do when it walked into the kitchen…but with every dash of salt, every sprig of cilantro, every cut of beef, its attention is redirected and refocused. As it places the perfectly-cooked steak onto the plate, it remembers something from its vast training data: a steak often pairs with a pat of butter! Now, it didn’t walk into the kitchen with a pre-fixed recipe. But its training, including the countless recipes and food articles it was exposed to, taught it that butter makes everything better.
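The kitchen loop can be sketched in a few lines of Python. This assumes a hypothetical toy_next_word function standing in for the model (ChatGPT’s real next-word choice comes from attention over the whole context and a probability for every token in its vocabulary, not hand-written rules). The point is the loop: each new word is appended to the context, and everything is re-read before the next word is chosen.

```python
def toy_next_word(context):
    """Hypothetical stand-in for the model: it inspects the WHOLE context so far.

    These hand-written rules only mimic the fact that a real model's next
    choice depends on everything written up to that point.
    """
    if "steak" in context and "butter" not in context:
        return "butter"
    if "butter" in context and "salt" not in context:
        return "salt"
    return "<end>"


def generate(prompt_words, max_new_words=10):
    context = list(prompt_words)
    for _ in range(max_new_words):
        word = toy_next_word(context)  # re-reads everything, including its own output
        context.append(word)
        if word == "<end>":
            break
    return context


print(generate(["season", "the", "steak"]))
# ['season', 'the', 'steak', 'butter', 'salt', '<end>']
```

“Salt” only shows up because “butter” was added a step earlier: the model’s own output changed what it paid attention to next.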

Where “Prompt Engineering” Goes Wrong

There are a lot of folks out there doing “prompt engineering” online. Some of them even charge for basic templates that, really, anyone should be able to write. (Don’t worry, if you can’t figure out how to write good prompts, subscribe to this Substack, because I’ll be teaching you.)

Most folks make three big mistakes when writing a prompt.

Unclear or Ambiguous Wording

Optimizing away potential linguistic ambiguity is the single best thing you can do…

Remember, ChatGPT was trained on vast quantities of text from all over the internet. If you make up an abbreviation, and it’s something that wasn’t seen a lot during that training, it’s not going to know how to attend to it.

Look: the ancestor of ChatGPT’s brain (the first transformer model) was introduced in perhaps the most important paper in the world of large language models. It was called “Attention Is All You Need” (Vaswani et al., 2017), and it says it right there in the title: you need attention. If ChatGPT doesn’t know how to pay attention to what you wrote, you’re wasting space in your prompt.

The same goes for ambiguous words or instructions. Let’s say you have a prompt that includes:

What is photosynthesis? Give me a comprehensive answer.

Well, you haven’t given it much to go on. Let’s dig into the problems:

“What is photosynthesis?” itself is pretty broad. The “lexical” attention heads in ChatGPT don’t really know what you mean by what is. Humans can probably sort it out (we’d likely have more context available to us about why someone is asking that question), but machines have trouble with such a vague request. Do you mean you want a simple definition? A detailed explanation? Maybe you really want to compare it to cellular respiration? Who knows?! ChatGPT has probably been trained on a lot of text that answers that exact question, and those answers vary widely in their level of detail. For many topics, it might go so far into the weeds (heh) that you are overwhelmed with its answer. For others, it might not go deep enough. Let’s see how different attention heads might read that sentence:

Syntactic Attention: this attention head would likely pay attention to what is, recognizing that it’s following the common syntax of a question.

Semantic Attention: here, the attention mechanism would try to guess what you mean by photosynthesis, and recognize that it’s a biological term related to plant life.

Local Attention: Oops. There are only three words here. Not much context for it to know what it’s supposed to pay attention to.

Global Attention: Another oops: there are only five words in the next sentence, and no words before it. Global attention evaluates the entire context, and since ChatGPT hasn’t yet begun to answer your question, that context only has these two sentences to tell it where its attention is supposed to be directed.

“Give me a comprehensive answer.” Seems straightforward, but…it’s not. Sure, it might lead to a more verbose answer, but without being given meaningful direction for depth and breadth, ChatGPT is more likely to cast a wide net on the topic. Not to mention, comprehensive is pretty subjective on its own.

Syntactic Attention: the imperative form of the prompt can be confusing on its own, because that sentence would have to rely on other attention heads to figure out what you are referring to.

Imperative Attention: if an “imperative attention” head was an emergent property (you can’t predict emergent properties, remember!) it would have trouble reconciling the imperative verb phrase give me with the direct object a comprehensive answer. Why? Because…

Semantic Attention: As I mentioned, comprehensive is a pretty subjective term. Without more qualification, its ability to attend to the semantic meaning of the word is dulled.

A reminder: while I’m describing certain attention heads as "Syntactic" or "Semantic" for illustrative purposes, in the actual model these heads work in a more distributed and complex manner. Studies have found these sorts of emergent behaviors in transformer models like the ones behind ChatGPT, but, as with starling murmurations, nobody can be 100% certain how they arise.

Optimizing away potential linguistic ambiguity is the single best thing you can do to improve how ChatGPT is able to attend to the text in your instructions. How would I optimize that prompt?

Please elucidate the purpose of photosynthesis and provide a detailed breakdown of its stages.

See the difference? That’s a damn lexical bonanza right there. It’s more specific, so ChatGPT can direct its attention heads to home in on the precise nuances of my intent: elucidating the purpose and detailing the stages of photosynthesis. The specificity and lexical density of this prompt help ChatGPT’s attention heads allocate their focus to both segments of the request, making it much more likely to respond in a way that is useful to me.

Gibberish Prompts That Seem To Work

Here’s an extract from a very popular prompt that you may have seen:

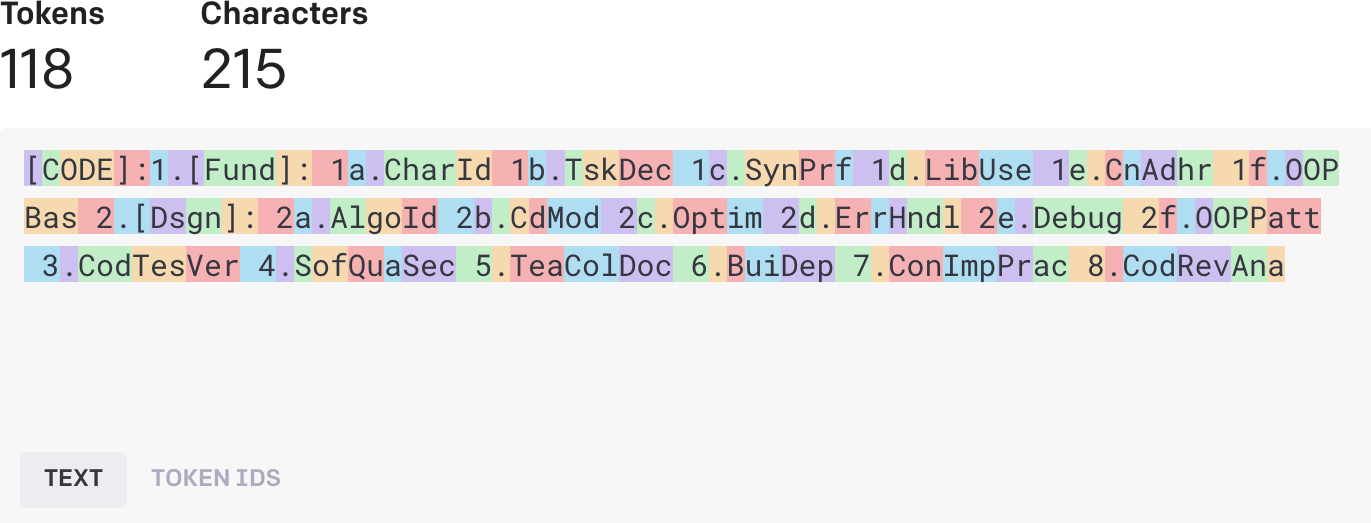

[CODE]:1.[Fund]: 1a.CharId 1b.TskDec 1c.SynPrf 1d.LibUse 1e.CnAdhr 1f.OOPBas 2.[Dsgn]: 2a.AlgoId 2b.CdMod 2c.Optim 2d.ErrHndl 2e.Debug 2f.OOPPatt 3.CodTesVer 4.SofQuaSec 5.TeaColDoc 6.BuiDep 7.ConImpPrac 8.CodRevAna

There’s…just…oh lord.

Don’t get me wrong, sometimes ChatGPT can astound you in its responses using that kind of prompt. But—and this is a promise—compressed and gibberish prompts are never going to beat plain English prompts.

This kind of gibberish “compression” has been making the rounds for a while. Some of them even use emoji to further “compress” words. There are…many problems with prompts like this. Let me take you through two of them.

Semantics

You might’ve spotted this one already, if you’ve made it this far. ChatGPT’s attention mechanism has learned to attend to plain English words. CdMod is most decidedly not a plain English word. Don’t trust me; ask the expert:

Me: What semantic meaning could "CdMod" have when used in a prompt to ChatGPT?

ChatGPT: […] "CdMod" isn't a recognized abbreviation or term with a specific semantic meaning […]

Now, if given the entire extract of the gibberish above (or even the entire gibberish prompt from its source), ChatGPT will give you a better answer about what CdMod means, but the underlying problem remains.

When ChatGPT encounters a word that it hasn’t seen a lot in its training, it is easily confused about its meaning. Sometimes, you’ll luck out. Other times, it won’t return the kind of depth and usefulness that you were expecting. It’s a crapshoot. So why confuse it?

Token Length

I haven’t really talked about “tokens” yet in this article, so here’s the shorthand definition: ChatGPT doesn’t read text letter by letter or word by word; it reads tokens, which are chunks from its “dictionary.” Common words are often a single token, while rarer words get split into multiple tokens. ChatGPT can only keep a limited number of tokens in its memory (its “context”) at one time, so reducing the number of tokens used in a prompt is important. Think of the context as your token budget. You want to spend it in a way that maximizes results, right?
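To see why made-up abbreviations can cost more than plain words, here’s a toy Python tokenizer. It assumes a tiny hypothetical vocabulary and a greedy longest-match rule; OpenAI’s real tokenizers use byte-pair encoding with a learned vocabulary of roughly 100,000 pieces, so real counts will differ.

```python
# A tiny, made-up vocabulary (hypothetical). Single letters and a space are
# included so that any English text can still be tokenized, just less efficiently.
VOCAB = {"code", "module", "error", "handling", "debug", "ging", " "} | set(
    "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ")


def tokenize(text):
    """Greedy longest-match tokenization: take the longest known piece each time."""
    tokens, i = [], 0
    while i < len(text):
        for end in range(len(text), i, -1):
            piece = text[i:end]
            if piece in VOCAB:
                tokens.append(piece)
                i = end
                break
        else:
            tokens.append(text[i])  # unknown character: fall back to one token
            i += 1
    return tokens


print(tokenize("error handling"))  # ['error', ' ', 'handling']
print(tokenize("ErrHndl"))         # ['E', 'r', 'r', 'H', 'n', 'd', 'l']
```

In this toy, “error handling” costs 3 tokens while “ErrHndl” costs 7, because none of the abbreviation’s pieces are in the vocabulary. It also shows why capitalization can matter: “debugging” is 2 tokens but “Debugging” is 6. For real counts, OpenAI’s open-source tiktoken library (for example, `tiktoken.get_encoding("cl100k_base").encode(text)`) reports the actual tokens.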

It’s important to balance your token budget with ChatGPT’s ability to infer meaning from your prompt, but in the case of compressed prompts like these, it’s the worst of both worlds.

The token budget is being wasted on abbreviations that ChatGPT can’t understand.

When I put it into OpenAI’s Tokenizer, that little snippet at the top of this section weighs in at 118 tokens. Might not seem like much, but remember, those abbreviations carry no meaningful semantics when ChatGPT first reads them.

What are the tokens that ChatGPT sees?

How would I fix that? I’d dump all the compressed stuff and use actual words that ChatGPT can attend to. Like this: (Sorry, Substack’s code blocks are giant, so I put it in a screenshot, but the code is there in the ALT text.)

Look at all those words. Surely that’s killing the token budget, right?

Nope.

In fact, it uses 27 fewer tokens. Yes, it’s more characters. And admittedly, character counts matter when you’re using ChatGPT’s online “Custom Instructions” feature. Then again, you’ve got 1,500 characters for each custom instructions box, and ChatGPT itself doesn’t care about letter counts anyway: it only cares about the number of tokens.

Why did I make some things lowercase? Some words, depending on their capitalization and context, might be split into multiple tokens. It’s a quirk of how ChatGPT’s tokenizer was built. As for the markdown formatting, ChatGPT has seen tons of markdown in its training. It’s well-acquainted with headings, bullets, and numbered lists. Using markdown can be a familiar way for ChatGPT to interpret instructions. Even adding markdown **bold** to a word can emphasize it, and it usually costs only a couple of extra tokens (roughly one for the opening ** and one for the closing **).

I think I’ve made my point here. I’ll move on to the third mistake, and bring this monstrous article to a close.

Asking Too Much

This one’s a short one, but I see it all the time.

ChatGPT will only respond with so much text before it stops. If you ask two questions in one prompt, it can cause a couple of issues.

Dilution of Response: ChatGPT might not handle both questions effectively, leading to answers that aren't as comprehensive as you'd like.

Output limit: Remember, ChatGPT isn’t verbose by default. There’s a cap on how much it will write in a single reply, so it has to split its response across both your questions.

The solution? Keep it simple. Ask one question at a time and follow up with another if needed. If the questions are unrelated, consider resetting the context or starting a new chat session. That way, ChatGPT can focus on giving you the best answer for each question without getting sidetracked, and without mixing the context of one question into the response to a different one.

Wake Up. It’s Over.

Phew! Thanks for hanging in there. This is the type of long-form writing that I love to do, and I’m always thrilled to share my insights. Knowledge shouldn’t be hoarded, so if I can help even a handful of folks get savvier with tech like ChatGPT, mission accomplished.

Stick around, because in the next post, I’ll reveal my top-tier “custom instructions” for ChatGPT. Spoiler: they’re kinda amazing.

Yeah, that’s a brag. I admit it. I promise, they’re worth it.

Until then…



Big news! Starting next week, I'm rolling out premium features for my paid subscribers. Dive deeper with exclusive articles, amazing task-focused prompts, and insights only available to my paid members.

But here's the cherry on top: If you sign up as a “Pioneer” tier member, you won't just get my premium content—you'll also gain direct access to ME on Substack chat. Every day, I'll be there to discuss, educate, and explore the fascinating world of large language models, ChatGPT, and all the latest generative text buzz. If you've been enjoying my writing so far, this is your chance to take it to the next level. Join me on the LLM Frontier as a Pioneer, and help ensure that quality posts like this keep flowing!
